This is the last in a series of articles that go through the architecture of a small game engine for a small game, The Jelly Reef. The very last thing implemented in the game was the graphics. In the first weeks of development the art team was looking for the right look for the game. In the meantime our designer was looking for the right feel. We decided to give this top priority and started writing the gameplay code and all the infrastructure. The graphics would wait until we knew what the game would be and look like.

With four talented artists on the team, we knew that the game would be hand-painted for the most part and that we would use sprite sheets for all the animations. You would expect this to lead to a 2D rendering system. After all, the math in all previous articles was 2D and Vector2Ds were all over the place. But at the same time we wanted some depth in our top-down perspective and considered faking it with a 2D parallax effect. That approach would have some drawbacks and might be difficult to tweak or draw art for. We opted against it, so the rendering system is actually a 3D one. There is a 3D perspective camera and 3D triangles are sent to the GPU, so the depth is inherent.

The goal was to have a system that can render static images in layers that make up the level, some animated sprites for the objects in the game, and lots of bubbles. All this without killing performance, of course. Almost everything is drawn alpha-blended, but some things, like the GUI overlay and seaweed, require special treatment. Additionally, we wanted to be able to scale and tint all the sprites.

At the core of our graphics system is the RenderJob, which is the only thing that our render manager can render. Its fields make it clear how we implemented the needed functionality.

    public enum RenderPass
    {
        AlphaTested,
        AlphaBlended,
        Overlay
    }

    // All the data needed for rendering an object on screen.
    // A class rather than a struct to enable reference storing.
    public class RenderJob : IComparable<RenderJob>
    {
        // In which pass should the geometry be rendered
        public RenderPass RenderPass;

        // Used for depth sorting in alpha-blended jobs
        public float Height;

        // The vertices in world or model coordinates
        public VertexPositionTexture[] Vertices;

        // Indices of the vertices
        public int[] Indices;

        // If the vertices are in model coordinates, a suitable transform
        // matrix should be submitted; if the vertices are in world
        // coordinates, the identity matrix should do fine
        public Matrix WorldMatrix;

        // A texture to draw this geometry with
        public Texture2D Texture;

        // Allows the texture to be tinted with a solid color
        public Vector4 Tint;

        // Allows a render job to disable the caustics
        public bool DisableCaustics;

        // Creates a new render job with a neutral (white) tint
        public RenderJob()
        {
            Tint = new Vector4(1, 1, 1, 1);
        }

        // Compares two render jobs by height (lower sorts first)
        public int CompareTo(RenderJob other)
        {
            if (Height > other.Height)
                return 1;
            if (Height < other.Height)
                return -1;
            return 0; // ==
        }
    }

The rendering in our game is handled by the rendering manager, and all entities that would like to be rendered need to implement the IRenderable interface.

    public interface IRenderable
    {
        IEnumerable<RenderJob> GetJobs();
    }
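
Because GetJobs returns a sequence, one entity can submit several jobs per frame. The sketch below illustrates this with a hypothetical `BubbleCluster` entity that yields one job per bubble. The `RenderJob` here is a stripped-down stand-in (only the pass and the height) so that the example compiles without XNA; the class name and its fields are assumptions for illustration, not code from the game.

```csharp
using System.Collections.Generic;

// Stand-ins for the engine types, reduced to what the sketch needs.
public enum RenderPass { AlphaTested, AlphaBlended, Overlay }

public class RenderJob
{
    public RenderPass RenderPass;
    public float Height;
}

public interface IRenderable
{
    IEnumerable<RenderJob> GetJobs();
}

// Hypothetical entity: a group of bubbles, each at its own height.
public class BubbleCluster : IRenderable
{
    private readonly float[] bubbleHeights;

    public BubbleCluster(float[] bubbleHeights)
    {
        this.bubbleHeights = bubbleHeights;
    }

    public IEnumerable<RenderJob> GetJobs()
    {
        // One alpha-blended job per bubble; the manager sorts them by height later.
        foreach (float height in bubbleHeights)
        {
            yield return new RenderJob
            {
                RenderPass = RenderPass.AlphaBlended,
                Height = height
            };
        }
    }
}
```

In a real entity each job would also carry its vertices, indices, texture and tint.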

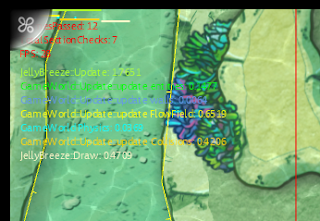

The manager simply goes over all the entities and fills up three lists, one for each render pass. The list of alpha-blended jobs gets sorted by height, from the lowest to the highest. Then the lists are rendered in the order in which the passes are declared in the enum above.
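
The collect, sort and draw steps just described can be sketched roughly as follows. This is a minimal illustration, not the game's actual manager: `RenderManagerSketch`, `BuildDrawOrder` and `StaticRenderable` are hypothetical names, the `RenderJob` is a stand-in without XNA types, and instead of issuing draw calls the method returns the jobs in the order they would be drawn.

```csharp
using System;
using System.Collections.Generic;

public enum RenderPass { AlphaTested, AlphaBlended, Overlay }

// Stand-in job: only the fields that influence draw order.
public class RenderJob : IComparable<RenderJob>
{
    public RenderPass RenderPass;
    public float Height;

    public int CompareTo(RenderJob other)
    {
        return Height.CompareTo(other.Height);
    }
}

public interface IRenderable
{
    IEnumerable<RenderJob> GetJobs();
}

// Hypothetical helper entity that returns a fixed set of jobs.
public class StaticRenderable : IRenderable
{
    private readonly RenderJob[] jobs;
    public StaticRenderable(params RenderJob[] jobs) { this.jobs = jobs; }
    public IEnumerable<RenderJob> GetJobs() { return jobs; }
}

public static class RenderManagerSketch
{
    // Returns all jobs in the order in which they would be drawn.
    public static List<RenderJob> BuildDrawOrder(IEnumerable<IRenderable> entities)
    {
        var alphaTested = new List<RenderJob>();
        var alphaBlended = new List<RenderJob>();
        var overlay = new List<RenderJob>();

        // Fill one list per render pass.
        foreach (IRenderable entity in entities)
        {
            foreach (RenderJob job in entity.GetJobs())
            {
                switch (job.RenderPass)
                {
                    case RenderPass.AlphaTested: alphaTested.Add(job); break;
                    case RenderPass.AlphaBlended: alphaBlended.Add(job); break;
                    case RenderPass.Overlay: overlay.Add(job); break;
                }
            }
        }

        // Only the alpha-blended jobs need sorting: lowest height first.
        alphaBlended.Sort();

        // Passes are drawn in enum declaration order.
        var order = new List<RenderJob>(alphaTested);
        order.AddRange(alphaBlended);
        order.AddRange(overlay);
        return order;
    }
}
```

Sorting from the lowest to the highest job means that blended sprites farther from the camera are drawn first, so nearer sprites blend over them.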

Each level can have up to five layers, and they are rendered like any other alpha-blended job. The height, and hence the amount of parallax, can be set for each layer. The size is automatically determined to fit the level size, with some margin for the parallax. The level layers can be drawn as layers in Photoshop. In the game, their alignment at the middle of the screen is the same as while drawing. This means that the parallax does not interfere with gameplay.
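
To give a feel for how a layer's height translates into parallax and margin, here is a small sketch. It assumes a simple pinhole camera looking straight down from `cameraHeight` onto the gameplay plane at height 0, with the view clamped to the level bounds; these assumptions and all names are mine for illustration, and the engine's actual calculation may differ.

```csharp
public static class ParallaxSketch
{
    // How far a point at layerHeight appears to move, measured in the
    // gameplay plane (height 0), per unit of camera movement.
    // Requires cameraHeight > layerHeight.
    public static float ParallaxFactor(float cameraHeight, float layerHeight)
    {
        return cameraHeight / (cameraHeight - layerHeight);
    }

    // Extra size a layer needs on each side so its edge never becomes
    // visible. halfViewWidth is half the visible width at height 0.
    public static float MarginPerSide(float cameraHeight, float layerHeight, float halfViewWidth)
    {
        // Half-width visible at the layer's height, by similar triangles.
        float halfViewAtLayer = halfViewWidth * (cameraHeight - layerHeight) / cameraHeight;
        return halfViewAtLayer - halfViewWidth;
    }
}
```

Under these assumptions a layer at height 0 has a factor of exactly 1 and needs no margin, while layers below it move more slowly and need to be larger than the level. A point directly under the camera projects to the screen center regardless of its height, which is consistent with layers lining up in the middle of the screen.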

The game takes place underwater. I really wanted us to get that feeling early on by adding water caustics, and started looking for real-time solutions on the subject. In the end none of the options looked convincing enough, and many were too computationally expensive. We quickly opted for a precalculated sequence of images that tile in both space and time. The image is applied in the shader, and the strength of the effect can be tweaked per level. The shader also scales the effect with depth, adding another subtle depth cue.
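
Tiling in space and time boils down to two wrap-around lookups: which frame of the sequence to use, and where inside that frame to sample. The sketch below shows this arithmetic on the CPU in C#; in the game it lives in the shader, and the names, the frame rate and the tile size are assumptions made for illustration.

```csharp
using System;

public static class CausticsSketch
{
    // Picks the frame of a looping image sequence for the given time.
    public static int FrameIndex(double timeSeconds, double framesPerSecond, int frameCount)
    {
        int frame = (int)Math.Floor(timeSeconds * framesPerSecond);
        // Double modulo keeps the result in [0, frameCount) for negative times too.
        return ((frame % frameCount) + frameCount) % frameCount;
    }

    // Wraps a world coordinate into [0, 1) texture space so the image
    // repeats every tileSize world units.
    public static float TileCoordinate(float world, float tileSize)
    {
        float t = world / tileSize;
        return t - (float)Math.Floor(t);
    }
}
```

For the loop to be seamless, the last frame of the sequence has to flow into the first, and each frame's opposite edges have to match.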

This wraps up the article series. I hope people will find it useful. If you need more info, drop a comment below the article.

A new version of the engine is already in the making. It's based on a somewhat different concept, is written in C++, and tries to be as platform-independent as possible.